Architecting a FaaS platform with Elixir and WebAssembly

Hobby Projects

Throughout university I kept tinkering with hobby projects whenever I had the time. During my first programming courses I started making little games, which got me interested in design patterns and software architecture. Later on I moved to web development and backend systems and went all in on the functional paradigm.

For my M.Sc. thesis I got into distributed systems and worked on a project based on OpenWhisk. From there on I worked mainly on FaaS platforms… that’s why I talk about them so much.

Fast-forward some time, and I started fantasizing about creating a FaaS platform from scratch. I wanted to work on a BIG project. Usually all my hobby projects were somewhat small and focused on learning a new language or framework. This time I wanted to make a real, distributed, scalable system.

Enter the PhD

I started my PhD with a project on function scheduling in FaaS using OpenWhisk as a base. At that point I wanted to have more control over the platform itself and know its internals. I talked about it with a colleague and we decided to start working on it.

The first thing we did was choose the tech stack… we were divided between Elixir, Rust and Go (spoiler: we ended up using all 3 in different ways). Elixir won right away because the idea of making a distributed platform that can execute user-provided functions on top of the BEAM was too good to pass up.

The actor programming model and the distributed nature of the BEAM made it a perfect fit for our needs. Adding nodes to the cluster takes very little effort, so the platform can scale horizontally.

Trying to apply good practices

We got something up and running relatively quickly. The fundamental architecture of the system was inspired by OpenWhisk (since we had more expertise with it): there is a “Controller” service (called Core) and a “Worker” service. The Core handles the state and exposes the API, then chooses one of the workers to execute a function. With Elixir and the BEAM this is straightforward, since the services can just talk to each other via messages.

The first wall we hit was how to actually run the functions’ code. In FaaS there is a thing called a “runtime”, which is the environment where the function runs. The typical case is a container with all the machinery required to receive some function code and run it. We had no idea how to make one from scratch, so we decided to reuse the OpenWhisk runtimes and replace them in the future.

We went with a ports and adapters architecture, where the “core” of the worker was nicely isolated from those pesky side effects, and everything else that interacted with the outside world was an adapter, including the communication with the Core service and the runtimes.
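To make the ports and adapters idea a bit more concrete, here is a minimal sketch in Go (the real worker is written in Elixir, so this is only an illustration). The names `Function`, `Runner`, `Worker` and `echoRunner` are made up for this example and are not taken from the project. The point is that the worker core depends only on a small interface (the port), and each runtime plugs in behind it as an adapter.

```go
package main

import (
	"errors"
	"fmt"
)

// Function is a hypothetical description of a deployed function.
type Function struct {
	Name string
	Code []byte
}

// Runner is the port: the only thing the worker core knows about runtimes.
type Runner interface {
	Run(fn Function, args map[string]any) (map[string]any, error)
}

// Worker is the core. It holds the logic and no side effects of its own.
type Worker struct {
	runner Runner
}

// Invoke validates the request and delegates execution to whatever adapter
// was plugged in behind the Runner port.
func (w Worker) Invoke(fn Function, args map[string]any) (map[string]any, error) {
	if fn.Name == "" {
		return nil, errors.New("function name is required")
	}
	return w.runner.Run(fn, args)
}

// echoRunner is a stand-in adapter. A real one would talk to a container
// or to an embedded wasm engine instead of echoing its input.
type echoRunner struct{ label string }

func (r echoRunner) Run(fn Function, args map[string]any) (map[string]any, error) {
	return map[string]any{"runner": r.label, "function": fn.Name, "args": args}, nil
}

func main() {
	fn := Function{Name: "hello"}
	// Swapping the adapter does not touch the Worker code at all.
	for _, r := range []Runner{echoRunner{"openwhisk"}, echoRunner{"wasm"}} {
		out, err := Worker{runner: r}.Invoke(fn, map[string]any{"name": "world"})
		fmt.Println(out, err)
	}
}
```

Because `Worker` only sees `Runner`, replacing one kind of runtime with another comes down to writing one more adapter.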

Furthermore, we wanted to have metrics and monitoring in place from the start. We added Prometheus to the mix and also used it to drive scheduling decisions: the Core checks the metrics of the workers to pick the best one. One last mention goes to Postgres, the database that stores the state of the platform.
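As a rough illustration of metrics-driven scheduling, here is a small Go sketch. The selection policy is an assumption made for the example (skip workers without enough free memory, then take the one with the lowest CPU load); it is not the policy the Core actually uses. The `WorkerMetrics` fields and the `PickWorker` function are hypothetical names.

```go
package main

import (
	"errors"
	"fmt"
)

// WorkerMetrics is a hypothetical snapshot of the values the Core could
// read from Prometheus for each worker.
type WorkerMetrics struct {
	Name         string
	CPULoad      float64 // fraction between 0.0 and 1.0
	FreeMemoryMB float64
}

// ErrNoWorker is returned when no worker can host the function.
var ErrNoWorker = errors.New("no worker has enough free memory")

// PickWorker applies the assumed policy: filter by free memory, then
// choose the least loaded worker among the remaining ones.
func PickWorker(workers []WorkerMetrics, requiredMB float64) (WorkerMetrics, error) {
	var best WorkerMetrics
	found := false
	for _, w := range workers {
		if w.FreeMemoryMB < requiredMB {
			continue // cannot fit the function, skip it
		}
		if !found || w.CPULoad < best.CPULoad {
			best = w
			found = true
		}
	}
	if !found {
		return WorkerMetrics{}, ErrNoWorker
	}
	return best, nil
}

func main() {
	workers := []WorkerMetrics{
		{Name: "worker-a", CPULoad: 0.9, FreeMemoryMB: 4096},
		{Name: "worker-b", CPULoad: 0.2, FreeMemoryMB: 128},
		{Name: "worker-c", CPULoad: 0.4, FreeMemoryMB: 2048},
	}
	chosen, err := PickWorker(workers, 256)
	fmt.Println(chosen.Name, err)
}
```

Keeping the policy in one pure function makes it easy to test and to swap for a different strategy later.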

Starting with OpenWhisk Runtimes

This choice was a win because we managed to use the OpenWhisk runtimes as black boxes, and they worked out of the box.

I already talked about OpenWhisk runtimes in a previous post.

The gist is that a runtime is a container with a web server that listens on a /init and a /run endpoint.

We just had to write a small adapter that would first send the function code with /init, and then call /run. With a deployed runtime everything worked like a charm.
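The init-then-run flow can be sketched as a small HTTP client in Go. This is not the project's adapter (that one is written in Elixir); `RuntimeClient` and `newFakeRuntime` are hypothetical names. The JSON payloads wrap everything in a `value` field, which is how I understand the OpenWhisk runtime protocol; treat the exact field names as assumptions to check against the runtime you use. To keep the example runnable without a container, a tiny fake runtime stands in for the real one.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// RuntimeClient is a hypothetical adapter around one runtime container.
type RuntimeClient struct {
	BaseURL string
	HTTP    *http.Client
}

// post sends a JSON payload and returns the raw response body.
func (c RuntimeClient) post(path string, payload any) ([]byte, error) {
	body, err := json.Marshal(payload)
	if err != nil {
		return nil, err
	}
	resp, err := c.HTTP.Post(c.BaseURL+path, "application/json", bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	out, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("%s returned %d: %s", path, resp.StatusCode, out)
	}
	return out, nil
}

// Init uploads the function source; the runtime keeps it for later runs.
func (c RuntimeClient) Init(name, code string) error {
	_, err := c.post("/init", map[string]any{
		"value": map[string]any{"name": name, "main": "main", "code": code, "binary": false},
	})
	return err
}

// Run invokes the previously initialized function with the given arguments.
func (c RuntimeClient) Run(args map[string]any) (map[string]any, error) {
	raw, err := c.post("/run", map[string]any{"value": args})
	if err != nil {
		return nil, err
	}
	var result map[string]any
	if err := json.Unmarshal(raw, &result); err != nil {
		return nil, err
	}
	return result, nil
}

// newFakeRuntime stands in for a real container: /init accepts anything,
// /run echoes the arguments back.
func newFakeRuntime() *httptest.Server {
	mux := http.NewServeMux()
	mux.HandleFunc("/init", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, `{"ok":true}`)
	})
	mux.HandleFunc("/run", func(w http.ResponseWriter, r *http.Request) {
		var req struct {
			Value map[string]any `json:"value"`
		}
		if err := json.NewDecoder(r.Body).Decode(&req); err != nil {
			http.Error(w, err.Error(), http.StatusBadRequest)
			return
		}
		json.NewEncoder(w).Encode(req.Value)
	})
	return httptest.NewServer(mux)
}

func main() {
	srv := newFakeRuntime()
	defer srv.Close()

	client := RuntimeClient{BaseURL: srv.URL, HTTP: srv.Client()}
	if err := client.Init("hello", "function main(args) { return args; }"); err != nil {
		fmt.Println("init failed:", err)
		return
	}
	result, err := client.Run(map[string]any{"name": "world"})
	fmt.Println(result, err)
}
```

Against a real runtime container, only `BaseURL` would change; the fake server exists purely so the sketch runs on its own.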

Landing on WebAssembly

To get something up and running and experiment with real function invocations, the OpenWhisk runtimes were perfect. They enabled us to quickly prototype and test the platform. Once the Core and Worker were in a better state, we wanted to start exploring new ideas for runtimes. As already spoiled, we went with WebAssembly.

With WebAssembly things work a bit differently. Wasm functions are compiled binaries that can be run directly by a proper wasm runtime (the word “runtime” is overloaded here), such as wasmtime. Now, instead of having a typical “FaaS runtime” that the worker service sends the code to and that runs it, we have a system like wasmtime that can run the wasm code directly, and the worker can use it through an SDK.

Knowing that, we decided to enhance the worker to use the Rust wasmtime crate, so the worker can just receive the wasm binary when needed and execute it. This was possible thanks to rustler, which lets us write Rust code that can be called from Elixir as Native Implemented Functions (NIFs). This means that the worker can call our Rust code to run the wasm functions, which in turn uses wasmtime.

The change was fairly straightforward. Once we had a working Rust library for the worker, all we had to do was write another adapter to use it. Internally we didn’t have to change anything, since in reality the worker has no idea what’s running the function; it just knows how to call it.

Let’s not forget the CLI

Initially I said we used Elixir, Rust and Go. Elixir makes up the majority of the codebase, and Rust is used for the wasm code that the worker uses to run the functions.

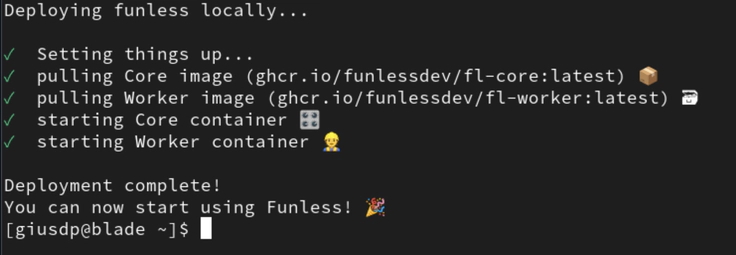

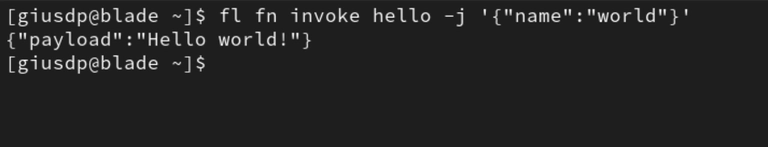

We also used Go, but for the CLI! With the CLI you can deploy the platform locally (it deploys the Core, the Worker, Postgres and Prometheus as containers), upload your wasm functions and invoke them.

A building block of the CLI is the Go SDK, which is automatically generated from an OpenAPI spec using a GitHub Action. It was pretty fun and useful, because as a first job I went to work on the CLI for the startup I’m currently working at.

Personal Achievements

It is not at all a project made to compete with other FaaS platforms. It was a great learning experience and a way to apply what I learned during the years. I was already content with this kind of result and considered it an achievement.

Being in a PhD program, we talked about this hobby project within our research group and decided to write a paper about it. We went a bit more in depth on the architecture and the flow of the system (function creation and invocation) and did some benchmarks.

We ended up submitting it to ICWS, a big conference with a really good ranking, and against all odds it got accepted! It was a great feeling to see that something that was born as a hobby project and a way to learn new things became a publication!